Von Eigenes Werk, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=1923693

Consider the following setup. You provide space to store containers and your customers have, after bringing the containers to you, the flexibility to retrieve them whenever they like. In order to not waste space you stack containers onto each other leaving you with a fundamental question: which container should be on top and which at the bottom? It is clear that you want „fast“ containers, i.e. containers that will probably be retrieved in near future, should not be stored under a „slow“ container, because then you will have to rearrange the containers in order to get access to the fast one. The key question hence is, how to find out in advance, which containers will be slow and fast.

To address this problem we applied maching learning: We were given a historical dataset with information about the containers such as where they came from or whether it contained products that needed cooling.

This information allowed us to categorize the containers in term of time-tresholds. We predict whether a container will stay longer or shorter than the treshold.

We tried different basic algorithms such as DecisionTree, GaussianNaiveBayes or K-Nearest-Neighbours and using these approaches we could already yield very good results. As this was my first encounter with Machine Learning I was quite impressed by its capability. Nevertheless I am sure that there are much more sophisticated algorithms and techniques that can enhance the accuracy of the predictions made by the algorithm.

To go a little more into detail I will describe a problem to you that we faced. First of all it is important to mention, that the algorithms we use are based on computing distances. In order to enable this we need the values of each feature to be numerical. Since the information about the containers where mainly given in categories such as „containerType“ with values A,…,G the first idea was to simply map them to integer values 1,…,7. The problem with this is that you implicitely would have encoded more structure onto the containers than what was initially there: you say that „containerType_A“ is further away of „containerType_D“ than of „containerType_B“, because the distance of one and four is greater than the distance of one and two. Of course that is not true and hence you have to be careful not to loose the symmetry inherent in the data. Otherwise the algorithm, based in computing distances, will use this „order“ yielding results that will probably differ from reality.

To get around this problem you artificially blow up the number of features of a container: instead of just one dimension for the feature „containerType“ you create seven features namely „containerType_A, …, containerType_G“. Then, for a container that originally had the feature „containerType“ being equal to „A“, you encode this information by setting the feature „containerType_A“ to one and all the other containerType features to zero. In other words: the new features act as indicatorfunctions for what type of container we have.

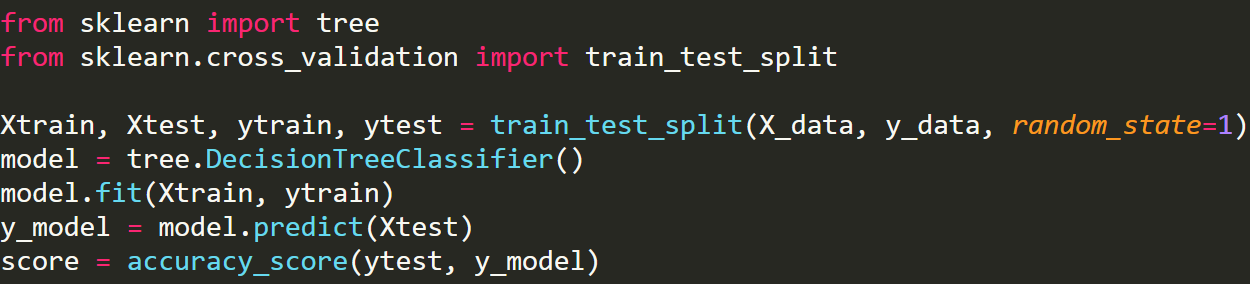

As I have mentioned before most of our information had this form leaving us eventually with a space of 1182 dimenions on which we could then apply our algorithm. Luckily, using python libraries, it is very easy to use the algorithms mentioned above:

You need your data to have the form of a (feature-)matrix (X_data) and a vector with the label on which you train and which afterwards you want to predict (y_data).

Then, as a first step you split your data into a training- and a testset. This allows you to check how your algorithm performs. You use the training data to (surprise) train your model. Afterwards you use your model to predict on the testdata and measure your performance using the accuracy_score. The last step can of course be replaced with any other measure dependent on what is most important to you.

The take-home message here is, that, once your data is „clean“, i.e. has the right form, no NaN-values, etc…, the actual application of a basic Algorithm, which can already give you a good intuition whether information can be extracted from the data using Maching Learning, is very easy and does not require a horrific amount of coding. This first prototype for containerclassification were merely 60 lines of code, which showed the potential gain of maching learning in this setup.