I recently had the opportunity to visit the Amazon IoT Hackathon in Munich. I expected a lot from the event and I was not disappointed. But more on the event later; let's start with a brief introduction to the AWS IoT cloud.

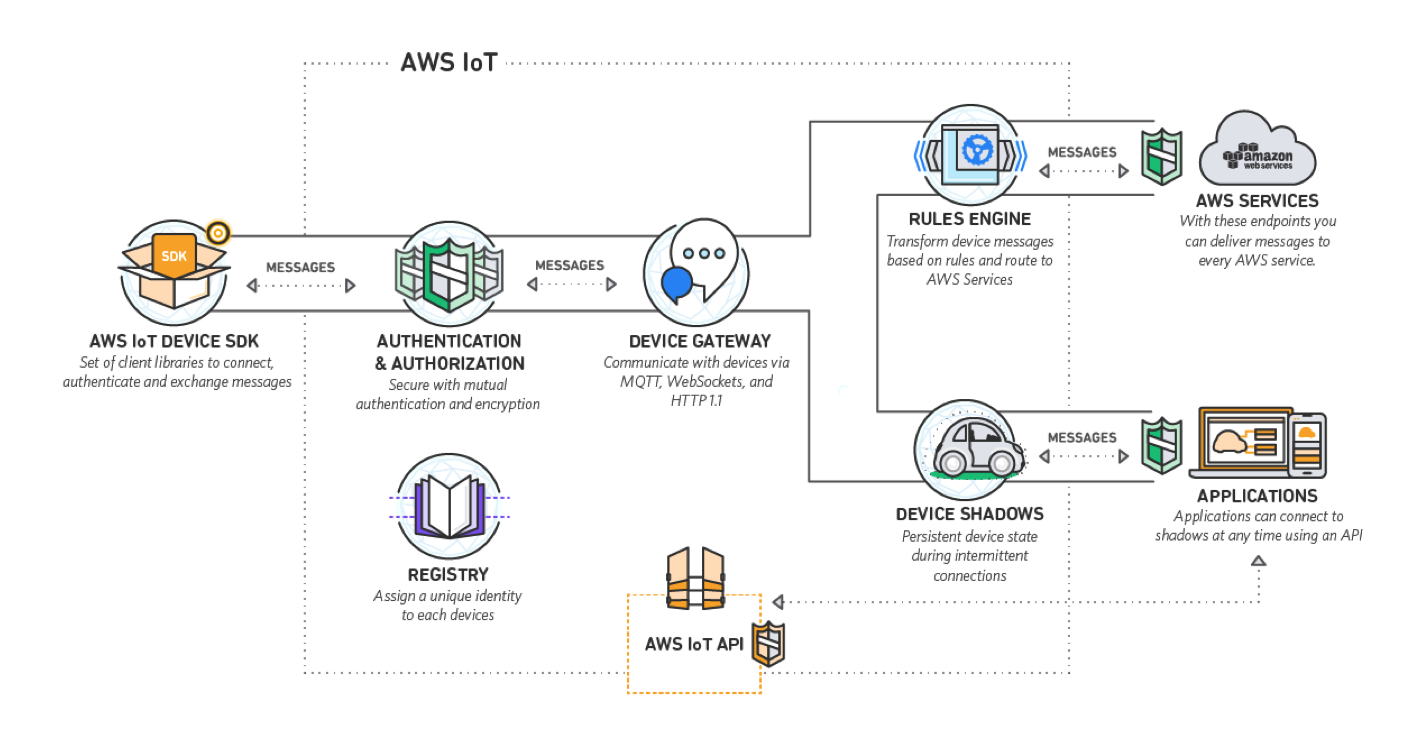

Amazon released its IoT Cloud at the very end of last year. Its main purpose is to handle device management and communication from and to the devices (aka "Things"). Its architecture is shown in the next figure.

The communication between the devices and the cloud is primarily based on the MQTT protocol. The devices publish state changes and custom events to MQTT topics and can subscribe to another topic so that they can be notified by the cloud in case of desired state changes (more on this later).
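To illustrate the publish/subscribe pattern behind MQTT, here is a minimal sketch of a toy in-memory broker. It is not an MQTT client and does not talk to AWS; the `InMemoryBroker` class is hypothetical and only supports exact topic matches (no wildcards, no QoS).

```python
import json
from collections import defaultdict


class InMemoryBroker:
    """Toy stand-in for an MQTT broker: exact-match topics only."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        # Register a callback that is invoked for every message on this topic.
        self._subscribers[topic].append(callback)

    def publish(self, topic, payload):
        # Deliver the payload to every subscriber of this topic.
        for callback in self._subscribers[topic]:
            callback(topic, payload)


broker = InMemoryBroker()
received = []

# The "cloud" side listens for sensor data.
broker.subscribe("tempSensorData", lambda topic, payload: received.append(json.loads(payload)))

# The "device" side publishes a reading as a JSON string.
broker.publish("tempSensorData", json.dumps({"temp": 42}))
```

A real device would use an MQTT client library or one of the AWS device SDKs instead, but the roles of publisher, topic and subscriber stay the same.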

Before a device first communicates with the cloud, a certificate needs to be created for it. Furthermore, a policy needs to be attached to the certificate, defining the rights of the device (for example, allowing it to publish messages to topics or to subscribe to topics). A policy for testing IoT applications could look like this:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [ "iot:*" ],
          "Resource": [ "*" ]
        }
      ]
    }

Here we see the first integration within the Amazon Cloud: policies use the same JSON syntax as the IAM policies known from AWS Identity and Access Management.
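The wildcard policy above is convenient for testing but grants every IoT action on every resource. As a sketch of a narrower alternative, the hypothetical helper below builds a policy that only lets one client connect, publish to one topic and receive messages from another. The region, account ID, client ID and the `edison-01/commands` topic are placeholder assumptions, not values from the hackathon.

```python
import json


def device_policy(region, account_id, client_id, publish_topic, subscribe_topic):
    """Build a narrower policy document for a single device (illustrative only)."""
    base = f"arn:aws:iot:{region}:{account_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            # Allow connecting only with this client ID.
            {"Effect": "Allow", "Action": ["iot:Connect"],
             "Resource": [f"{base}:client/{client_id}"]},
            # Allow publishing only to the sensor topic.
            {"Effect": "Allow", "Action": ["iot:Publish"],
             "Resource": [f"{base}:topic/{publish_topic}"]},
            # Subscribing is authorized against a topic filter ...
            {"Effect": "Allow", "Action": ["iot:Subscribe"],
             "Resource": [f"{base}:topicfilter/{subscribe_topic}"]},
            # ... while receiving messages is authorized against the topic itself.
            {"Effect": "Allow", "Action": ["iot:Receive"],
             "Resource": [f"{base}:topic/{subscribe_topic}"]},
        ],
    }


policy = device_policy("eu-central-1", "123456789012", "edison-01",
                       "tempSensorData", "edison-01/commands")
policy_json = json.dumps(policy, indent=2)
```

The resulting `policy_json` string could be pasted into the console when creating the policy.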

Speaking of integration with other Amazon products: rules can be defined within the AWS IoT cloud, which can then forward the message to various other Amazon Cloud products, including S3 for storing the message, Kinesis for stream processing, Lambda for executing custom code on receipt of the message, etc. Rules have an SQL syntax like this:

    SELECT <JSON attributes> FROM <MQTT topic filter> WHERE <filter conditions on the JSON attributes>

So if you have a device submitting temperature values every second to the topic tempSensorData and you want to be notified via email if the temperature is above 40 degrees, you can create a rule

    SELECT temp FROM 'tempSensorData' WHERE temp > 40

and configure an action to send a message to SNS (Simple Notification Service), which will send out the mail.
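To make the semantics of the temperature rule concrete, here is a small local sketch that mimics what the rule does to each incoming message. It does not use the AWS rules engine; `apply_rule` is a hypothetical helper and the sample messages are made up.

```python
import json


def apply_rule(raw_message, threshold=40):
    """Mimic the temperature rule for a single JSON message.

    Returns the selected attributes if the filter matches, otherwise None.
    """
    message = json.loads(raw_message)
    temp = message.get("temp")
    if temp is None or temp <= threshold:
        return None          # the WHERE clause does not match: nothing is forwarded
    return {"temp": temp}    # the SELECT clause keeps only the temp attribute


messages = [
    '{"temp": 38.5, "humidity": 40}',
    '{"temp": 41.2, "humidity": 35}',
    '{"humidity": 50}',
]

# Only matching messages would be handed to the configured action (e.g. SNS).
forwarded = [result for result in (apply_rule(m) for m in messages) if result is not None]
```

Note that each message is evaluated on its own; the sketch keeps no state between messages, just like the rules described here.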

As you might have noted, however, rules only act on single messages. If aggregation of messages is required, the messages need to be forwarded to Kinesis and the resulting stream needs to be processed there.

Devices can also have state information – for example an LED can send its current color to the cloud. This information can be stored in device shadows. These device shadows are always available, even if the real device is not connected, so other applications can read the last state of the device. Applications can also update the state of the device shadow (asking the LED to turn green, for example). A state is always made up of a desired and a reported value.

You can change the desired value in the cloud and this change will be propagated to the real device – the message containing the delta to the previous state is sent to an MQTT topic the device has subscribed to. Once the device has completed the state change, it will report back the current state in the reported attribute. From this moment on, the cloud will know that the change is processed by the device and the device shadow is in sync again. So if you want to change the color of the LED to green, you can change the attribute of the device shadow to

    {
      "desired": {"color":"green"},
      "reported": {"color":"red"}
    }

Once the color is set by the device, it will report back:

    {
      "desired": null,
      "reported": {"color":"green"}
    }

There is also a registry component for storing metadata about the devices – device location or manufacturer for example. This information can be used to query a set of devices – for example find all the devices in Munich.
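Coming back to device shadows for a moment: the interplay of desired and reported values can be sketched with a small helper that computes which attributes the device still has to change. This is only an illustration of the idea, not the algorithm AWS uses; `shadow_delta` is a hypothetical name.

```python
def shadow_delta(desired, reported):
    """Return the desired attributes whose values differ from the reported ones."""
    desired = desired or {}      # treat a null/None section as empty
    reported = reported or {}
    return {key: value for key, value in desired.items() if reported.get(key) != value}


# The cloud asks for green while the LED still reports red: the device must act.
pending = shadow_delta({"color": "green"}, {"color": "red"})

# After the device has reported green, nothing is left to do.
in_sync = shadow_delta({"color": "green"}, {"color": "green"})
```

An empty result corresponds to the situation where the shadow and the real device are in sync again.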

You can do all these activities from the AWS Console, but Amazon has also built an API to do administrative tasks programmatically (if the appropriate policies allow you to do so). In order to speed up connecting devices to the cloud, Amazon has also released client SDKs which you can use on your device to connect it to the cloud – currently C, JavaScript and Arduino Yún libraries are available.

Now let's get back to the event itself:

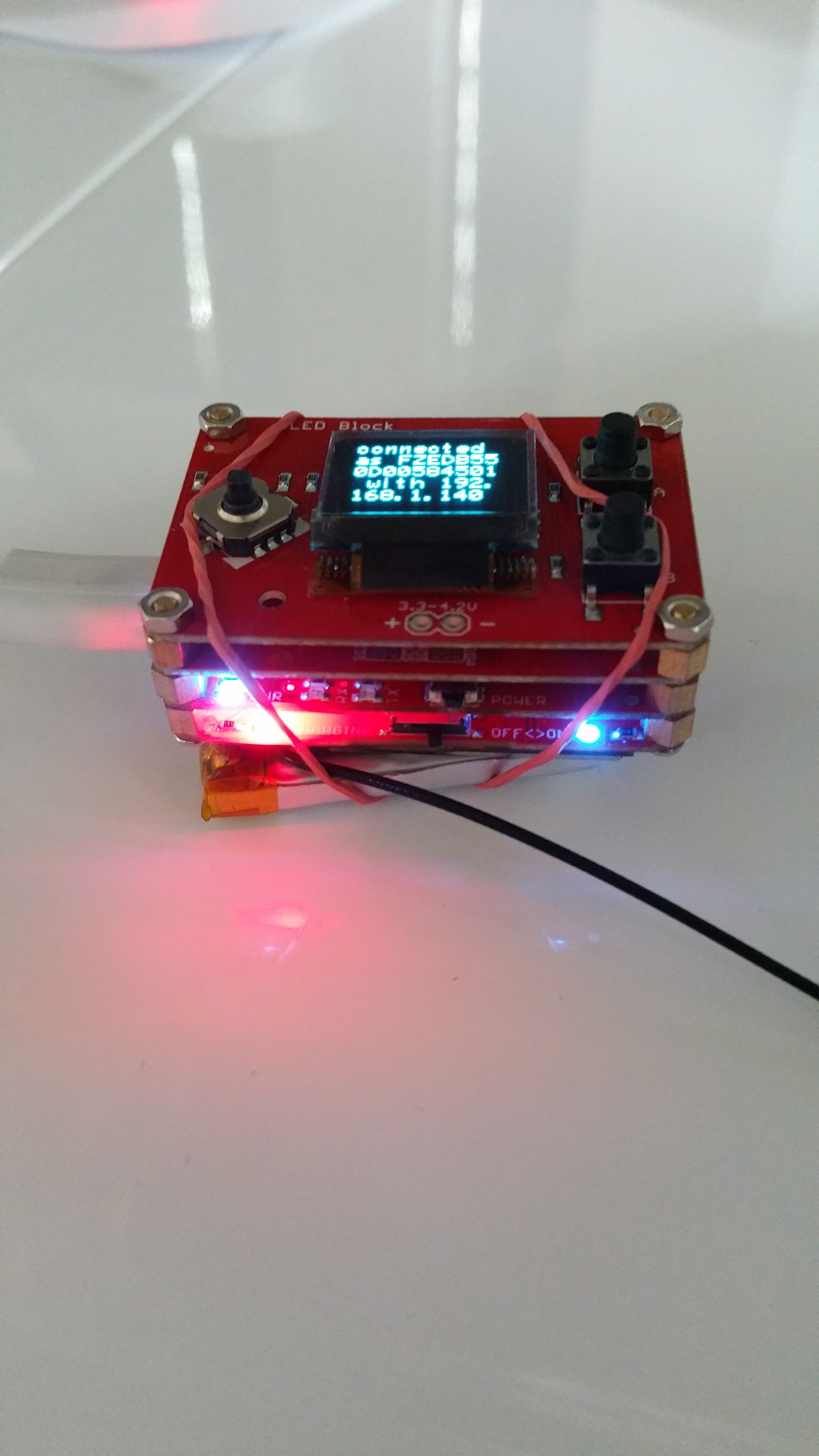

We started with some presentations explaining the main concepts of AWS IoT. We also had a short introduction from Intel covering the hardware part of the Hackathon. Then came the more interesting part: we formed teams, and each team received an Intel Edison board with some sensors and a small display attached.

Each team had to build something with the Edison and the AWS products within a time limit of about 4 hours. Sample code was provided, which read all sensor values and sent them to the cloud every second. At the end, each team had to present its idea and vote for the best idea/solution presented.

Our team members had little to no experience with AWS products, so it was quite a challenge for us to create something useful within this short time period. We wanted to build something which not only sends data to the cloud but also receives data from it (in order to test the communication in both directions). After a short discussion, we had the idea: a barkeeper training application.

Our idea was this: the Edison is attached to a shaker, and the accelerometer measures the power of the shaking, which is submitted to the cloud. The values are aggregated there, and after a minute a text is sent to the display of the trainer (in our case, the display on the Edison) stating whether the shaker was shaken correctly. A button on the bottom of the shaker (in our case, on the Edison) should detect that the shaker has been put back on the table and reset the text message.
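One simple way to turn accelerometer readings into a single "power of shaking" value is the magnitude of the acceleration vector. The sketch below assumes that approach and a hypothetical `intensity` message field; it is not the code we ran on the Edison.

```python
import json
import math


def shake_intensity(x, y, z):
    """Magnitude of the acceleration vector (same unit as the inputs)."""
    return math.sqrt(x * x + y * y + z * z)


def build_message(x, y, z):
    """Serialize one reading as the JSON payload sent to the cloud."""
    return json.dumps({"intensity": round(shake_intensity(x, y, z), 3)})


# Example reading with made-up axis values.
payload = build_message(3.0, 4.0, 0.0)
```

Reducing the three axes to one number on the device keeps the messages small and makes the later evaluation in the cloud simpler.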

Creating a certificate, installing it and starting the Edison was an easy task. Sensor messages started arriving in the cloud immediately. Creating a rule and forwarding the messages to Kinesis (for aggregating them) was also no problem.

For the aggregation itself we did not find a solution in the short time window. Apparently this is not so easy to do with Amazon's current cloud offering; custom code is inevitable here. Amazon has, however, already noticed that a component is missing here and has started developing Kinesis Analytics, which should simplify the aggregation of events in the future.

Instead of analysing the stream directly, we created a Kinesis Firehose rule to buffer events for a minute and then write the aggregated data to S3 in CSV format. Setting up a trigger in S3 that fires when a new file has been written and starts a Lambda script to calculate whether the shaking was OK was very straightforward as well.
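The evaluation inside such a Lambda script can be as simple as averaging one column of the CSV file. The following sketch shows only that calculation on a CSV string; the column names, the header row and the threshold of 1.5 are assumptions for illustration, and fetching the file from S3 is left out.

```python
import csv
import io


def shaking_ok(csv_text, min_mean_intensity=1.5):
    """Decide whether the mean intensity in one buffered CSV file is high enough."""
    reader = csv.DictReader(io.StringIO(csv_text))
    values = [float(row["intensity"]) for row in reader]
    if not values:
        return False  # no readings in this minute: treat as not shaken
    return sum(values) / len(values) >= min_mean_intensity


# One buffered minute of (made-up) readings, as it might arrive in S3.
sample = "timestamp,intensity\n1,1.0\n2,2.0\n3,3.0\n"
result = shaking_ok(sample)
```

Because each file covers a fixed one-minute buffer, this evaluates tumbling windows rather than a sliding window.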

This way, we evaluated whether the shaker had been shaken enough for a minute (although we could not use a sliding window over the last minute of data because of the lack of stream processing). The next step was to send a text message back to the device. For that, we needed to update the device shadow. It took us quite some time to figure out how to use the SDK to do that, but in the end we succeeded in creating a Lambda script that updates the device shadow, thus sending data back to the device.
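For readers who want to try the shadow update themselves, here is a hedged sketch of how it can look in Python. It assumes a client object with an `update_thing_shadow(thingName=..., payload=...)` method, which is what boto3's `iot-data` client offers; the thing name `edison-01`, the `text` attribute and the `RecordingClient` stub are hypothetical, and this is not the script we wrote at the hackathon.

```python
import json


def send_text_to_device(iot_data_client, thing_name, text):
    """Set the desired 'text' attribute in the device shadow."""
    # Shadow updates wrap the desired values in a top-level "state" object.
    payload = json.dumps({"state": {"desired": {"text": text}}}).encode("utf-8")
    iot_data_client.update_thing_shadow(thingName=thing_name, payload=payload)
    return payload


class RecordingClient:
    """Stub used here so the sketch runs without AWS credentials."""

    def __init__(self):
        self.calls = []

    def update_thing_shadow(self, **kwargs):
        self.calls.append(kwargs)


# In a Lambda function the client would be created with boto3.client("iot-data").
client = RecordingClient()
sent = send_text_to_device(client, "edison-01", "Well shaken!")
```

Passing the client in as a parameter keeps the function easy to test locally before deploying it.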

The last task was also an easy one: reacting to a button push by invoking the previously created Lambda script and sending the "reset" text. For this, we created another rule, which invoked Lambda.

It was interesting to see how easy it is to get started with the AWS IoT cloud and configure the first rules. We noticed that if you can use the built-in AWS integrations (of which there are quite a few), configuring your integration is easy. Once you need something more complex, you can use custom code (for example in a Lambda script), but for that some practice and knowledge of the AWS APIs is an advantage. What we really missed was an easy way to aggregate IoT messages and react to the aggregated results – but, as said earlier, Amazon is already working on this.

All in all, it was great to see how multiple AWS components work seamlessly together and how easy it is to start working with AWS. At the end of the day, our solution was voted 2nd out of about 15 teams. We really cannot complain about this result!

Summarizing my impressions:

- Amazon AWS (and the IoT Cloud) is easy to start with; you can set up a sample integration within minutes.

- For more complex integrations custom code is needed. Experience is very helpful if you are planning to do that.

- There is a huge list of available Amazon Cloud products, with lots of different cost structures. In order to find the best combination of products for your integration needs, some experience is needed, too.

- In one sentence: Amazon AWS is easy to start with, challenging to master.
